Portfolio Details

Project information

- Category: Sensor Design

- Client: GIKI

- Project date: 01 July, 2024

- Project URL: 3d_lidar_camera_calib

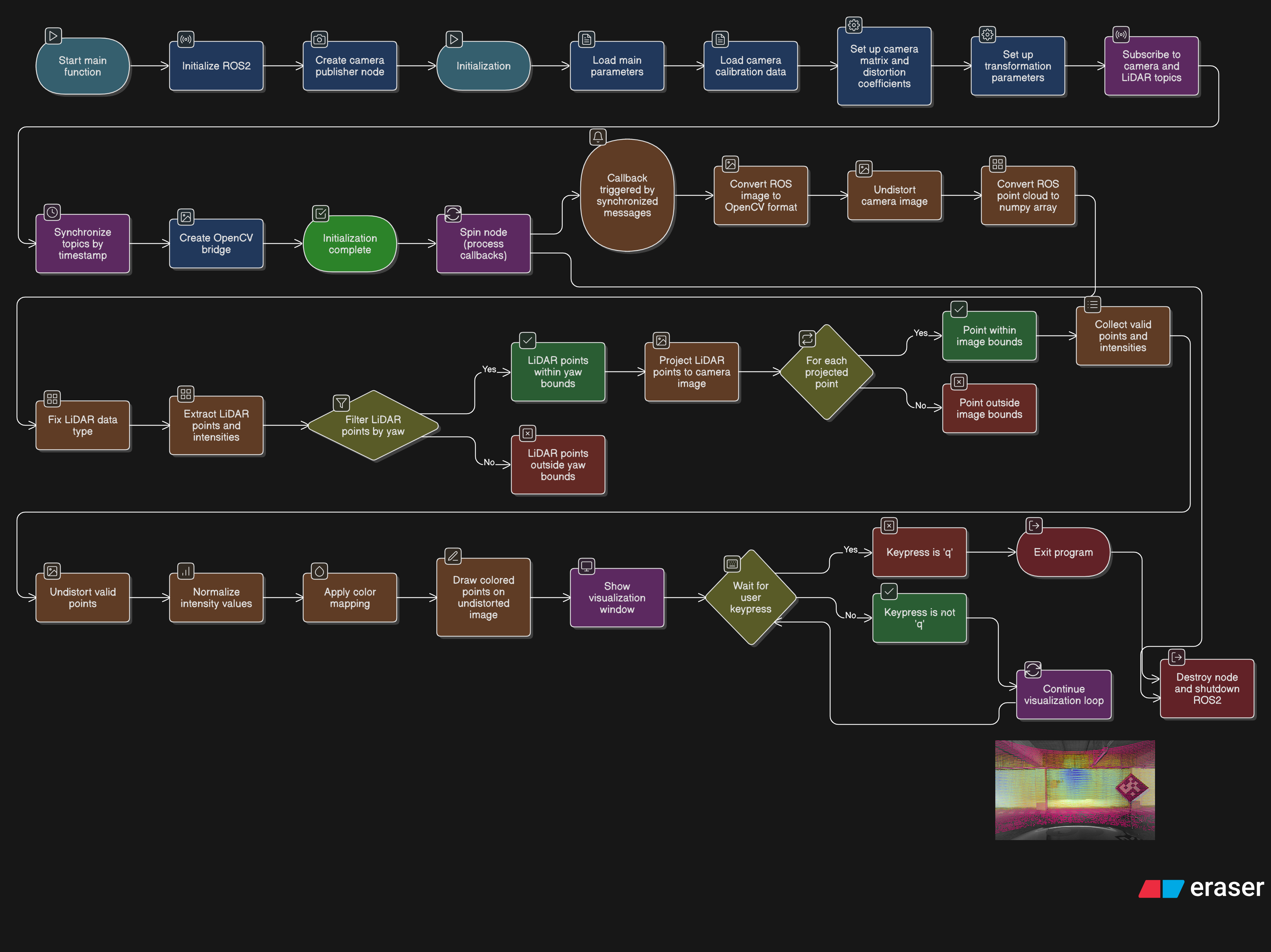

Camera LiDAR Fusion Visualization

This ROS 2 Python node synchronizes camera and LiDAR data and projects the 3D LiDAR points onto the 2D camera image for visualization. It first loads the camera intrinsic and distortion parameters from a YAML configuration file. Incoming grayscale images are undistorted with these parameters, and LiDAR PointCloud2 messages are converted to NumPy arrays. The node keeps only LiDAR points within a ±60° yaw angle so that it focuses on the front view. The selected points are then projected onto the image plane using the camera extrinsic and intrinsic parameters. Only points that fall within the image boundaries are retained; these are undistorted, and their intensity values are normalized. Finally, the points are drawn on the undistorted image using a rainbow colormap keyed to intensity, and the result is displayed in real time with OpenCV.
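The filtering, projection, and normalization steps described above can be sketched with NumPy alone. This is a minimal illustration, not the project's actual code. The function names, the example intrinsic matrix `K`, the extrinsic rotation `R` and translation `t`, and the 640x480 image size are all assumptions made for the example. It also assumes the LiDAR frame has x forward, y left, and z up, that the camera frame has z forward, and that lens distortion has already been handled.

```python
import numpy as np


def filter_front_view(points, max_yaw_deg=60.0):
    """Keep points whose yaw (angle from the +x axis in the x-y plane)
    lies within +/- max_yaw_deg. `points` is (N, 4): x, y, z, intensity."""
    yaw = np.degrees(np.arctan2(points[:, 1], points[:, 0]))
    return points[np.abs(yaw) <= max_yaw_deg]


def project_to_image(points, R, t, K, width, height):
    """Project LiDAR points into pixel coordinates with a pinhole model.

    Returns (uv, intensity) for points that are in front of the camera
    and land inside the image boundaries.
    """
    cam = points[:, :3] @ R.T + t            # LiDAR frame -> camera frame
    in_front = cam[:, 2] > 0                 # drop points behind the camera
    cam, intensity = cam[in_front], points[in_front, 3]

    uvw = cam @ K.T                          # apply intrinsics
    uv = uvw[:, :2] / uvw[:, 2:3]            # perspective divide

    inside = (
        (uv[:, 0] >= 0) & (uv[:, 0] < width)
        & (uv[:, 1] >= 0) & (uv[:, 1] < height)
    )
    return uv[inside], intensity[inside]


def normalize_intensity(intensity):
    """Scale intensities to the 0..255 range as uint8 (colormap input)."""
    if intensity.size == 0:
        return np.zeros(0, dtype=np.uint8)
    lo, hi = intensity.min(), intensity.max()
    if hi == lo:
        return np.zeros(intensity.shape, dtype=np.uint8)
    return ((intensity - lo) / (hi - lo) * 255).astype(np.uint8)


# Hypothetical calibration values, chosen only for this example.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
# Assumed axis mapping: camera x = -LiDAR y, camera y = -LiDAR z, camera z = LiDAR x.
R = np.array([[0.0, -1.0, 0.0],
              [0.0, 0.0, -1.0],
              [1.0, 0.0, 0.0]])
t = np.zeros(3)

# Four made-up points: x, y, z, intensity.
cloud = np.array([[10.0, 0.0, 0.0, 5.0],    # straight ahead
                  [10.0, 2.0, 0.0, 10.0],   # slightly to the left
                  [-5.0, 0.0, 0.0, 20.0],   # behind the sensor
                  [1.0, 5.0, 0.0, 30.0]])   # yaw of about 79 degrees

front = filter_front_view(cloud)
uv, intensity = project_to_image(front, R, t, K, width=640, height=480)
colors = normalize_intensity(intensity)
print(uv, colors)
```

In a node like the one described, the normalized values could then be passed through OpenCV's `cv2.applyColorMap` with `cv2.COLORMAP_RAINBOW` and drawn on the undistorted image with `cv2.circle`. That drawing step is left out here so the sketch depends only on NumPy.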